The AI Argument: Why Pushback Makes Better Content

I recently had an extended “conversation” with an AI system while working on a piece of political commentary about a controversial current event. What started as frustration—the AI initially declined parts of my request, citing concerns about factual accuracy—evolved into a productive collaboration that yielded a far stronger final product than my original vision.

The interaction was instructive. I accused the AI of political bias when it pushed back on my framing. The AI made several factual errors that I had to correct. We went back and forth, with me providing direct quotes and evidence, the AI conducting additional research, and both of us refining the work through multiple iterations. By the end, we’d produced something more accurate, more thoroughly documented, and ultimately more forceful than what I’d initially requested.

That conversation revealed something important: working with AI systems effectively requires understanding that they’re neither magic answer machines nor simple tools that execute commands. They’re collaborative research partners with specific strengths and limitations, and getting the best results requires knowing how to work with them rather than simply directing them.

This guide distills the principles that emerged from that interaction. They apply whether you’re working on political analysis, academic research, business writing, investigative journalism, or any other domain where accuracy and persuasiveness both matter. In each case, the best results come from collaborative iteration rather than simple command-and-response.

Core Principles

1. Expect Initial Pushback on Factual Claims

When an AI system questions or declines part of your request, it’s often flagging potential accuracy issues rather than opposing your goals. This isn’t obstruction—it’s the system trying to protect the credibility of your final product.

What to do: Don’t interpret caution as bias. Ask yourself whether the AI has identified legitimate factual concerns that could undermine your argument.

2. Provide Sources and Direct Evidence

AI systems work from training data with knowledge cutoffs and can make errors. When you have access to primary sources—direct quotes, recent news articles, official statements—providing these dramatically improves output quality.

What to do: If the AI seems uncertain or incorrect about facts central to your request, supply direct quotes, links, or specific information. This gives the system the ground truth it needs.

3. Correct AI Errors Assertively

AI systems make mistakes—understating numbers, mischaracterizing situations, or missing recent developments. When you catch an error, state it directly and provide the correct information.

What to do: Don’t assume the AI’s initial response is definitive. If something seems wrong, challenge it. Say “You’re incorrect about X. Here’s what actually happened,” and include the quote or source that shows it. Given better information, the system will usually adjust.

4. Distinguish Between Accuracy Concerns and Political Bias

When an AI insists on factual accuracy, this isn’t the same as taking a political position. A system that refuses to state speculation as fact, that wants direct quotes before making attributions, or that distinguishes between disputed and established facts is trying to make your argument stronger, not undermine it.

What to do: Separate the AI’s concern for accuracy from its stance on your underlying argument. An AI can forcefully advocate for your position while insisting that position be built on verifiable facts.

5. Use Iteration to Refine and Strengthen

The most powerful results come from back-and-forth refinement. Initial drafts may lack specific details, miss important context, or fail to emphasize key points. Each round of feedback improves the output.

What to do: Don’t settle for the first draft. Add new information, request additional research, ask for sections to be expanded or refocused. Each iteration can incorporate new facts and sharpen the argument.

6. Leverage AI’s Research Capabilities

AI systems with web search can find current information, verify claims, and discover supporting evidence you might not have. But they need direction about what to search for.

What to do: If the AI seems to lack crucial context, ask it to search for specific information. Direct it toward the gaps in its knowledge rather than assuming it has all relevant facts.

7. Recognize That AI Doesn’t “Change Its Mind”

AI systems don’t have opinions that you convince them to abandon. They operate on the information available to them. When an AI’s response shifts after you provide new information, it’s not that you “won an argument”—it’s that you supplied data the system didn’t have.

What to do: Frame your corrections as providing information rather than winning a debate. The goal is collaborative accuracy, not persuading the AI to switch sides.

8. Demand Specificity and Action Items

Vague AI outputs are less useful than concrete, specific recommendations. If you’re working on analysis or advocacy, push the AI to provide actionable details rather than general observations.

What to do: Ask for specific quotes, concrete policy recommendations, detailed explanations, and clear action steps. Request that abstract points be grounded in verifiable facts and particular examples.

The Collaborative Process in Practice

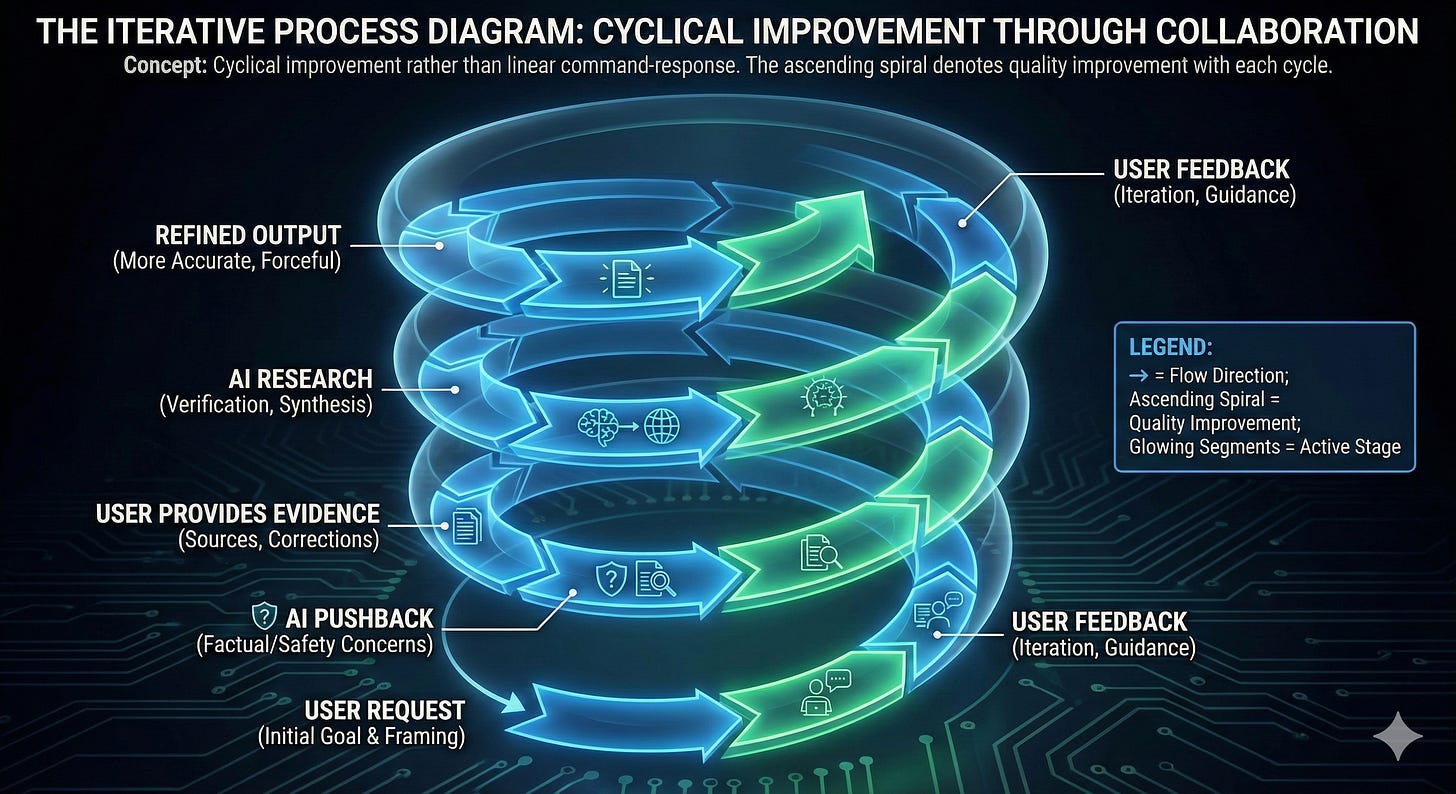

Effective AI interaction follows a pattern:

Initial Request: You provide your goal and initial framing

AI Response: The system identifies what it can do, what concerns it has, and what additional information it needs

User Correction: You provide missing facts, correct errors, and clarify priorities

AI Research: The system searches for additional context and supporting evidence

Iterative Refinement: You request changes, additions, or shifts in emphasis

Final Product: The result is more accurate and forceful than either party could have produced alone
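For readers who script their AI sessions rather than typing into a chat window, the pattern above can be sketched as a short loop. This is only an illustration under stated assumptions: `ask_model` is a hypothetical stand-in for whatever AI system you use, and `Session`, `refine`, and `fake_model` are names invented for this example, not part of any real library.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Session:
    """Running transcript of one drafting session."""
    messages: List[str] = field(default_factory=list)

    def send(self, ask_model: Callable[[List[str]], str], text: str) -> str:
        # Record the user's message, then record the model's reply.
        self.messages.append("USER: " + text)
        reply = ask_model(self.messages)
        self.messages.append("AI: " + reply)
        return reply


def refine(
    ask_model: Callable[[List[str]], str],
    request: str,
    corrections: List[str],
) -> Tuple[str, Session]:
    """Send the initial request, then one follow-up per correction."""
    session = Session()
    draft = session.send(ask_model, request)
    for correction in corrections:
        # Each correction carries its evidence, as the principles recommend.
        draft = session.send(ask_model, "Correction, with source: " + correction)
    return draft, session


def fake_model(messages: List[str]) -> str:
    # Hypothetical stand-in for a real AI system: it only reports
    # how many user turns it has seen so far.
    user_turns = sum(1 for m in messages if m.startswith("USER: "))
    return f"draft v{user_turns}"


draft, session = refine(
    fake_model,
    "Write commentary on the event",
    ["direct quote from the official statement", "corrected figure"],
)
print(draft)
```

The point of keeping the whole transcript in `session.messages` is that every correction reaches the model alongside everything said before it, which is what lets each round build on the last instead of starting over.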

Red Flags in AI Responses

Be concerned if an AI:

Makes definitive claims about recent events without searching for current information

States speculation as established fact

Attributes quotes without direct sources

Refuses to search for verifiable information when asked

Accepts your framing without questioning potentially inaccurate elements

These behaviors suggest the system is prioritizing compliance over accuracy, which will weaken your final product.

The Goal: Collaborative Accuracy

The most effective use of AI systems treats them as research and writing partners that:

Challenge you to provide better evidence

Search for supporting information

Catch logical inconsistencies or factual errors

Demand that strong arguments be built on solid foundations

The result of this collaborative process is content that’s both more forceful and more credible—advocacy built on facts, analysis grounded in evidence, and arguments that can withstand scrutiny precisely because they’ve been tested through the process of creation.

Conclusion

Working effectively with AI isn’t about getting the system to agree with you—it’s about leveraging its capabilities for research, fact-checking, and synthesis while providing the context, corrections, and direction it needs to produce accurate, powerful results. The best outputs come from users who treat AI as a demanding collaborator rather than a compliant assistant.

When an AI pushes back, provide better information. When it makes errors, correct them assertively. When it seems uncertain, direct its research. The friction in the process isn’t a bug—it’s a feature that produces stronger, more credible final products.
